When you manage vast, complex networks of pipelines, simply keeping the lights on isn't enough. True operational excellence hinges on something deeper: measuring and optimizing pipeline performance with precision. This isn't just about avoiding leaks or downtime; it's about transforming raw operational data into a powerful engine for efficiency, safety, and strategic growth.

It's a world where Pipeline Project Coordinators are no longer just scheduling maintenance; they're interpreting real-time data streams, leveraging AI to predict the future, and ensuring every decision is backed by robust analytics. The stakes are high, but so are the rewards for those who embrace the modern data-driven approach.

At a Glance: Your Takeaways on Pipeline Performance

- Data is your bedrock: Integrate diverse data streams (sensors, SCADA, maintenance logs) into a unified system.

- Real-time is essential: Continuous monitoring helps you detect anomalies and act before failures occur.

- Predictive power: Use AI and machine learning to anticipate issues, not just react to them.

- Scalability matters: Prepare for ever-increasing data volumes (projected to hit 182 zettabytes by 2025).

- Compliance by design: Robust data tracking isn't optional; it's a regulatory and safety imperative.

- People and tech go hand-in-hand: Build cross-functional teams and foster a data-centric culture for success.

- Expect big wins: Companies adopting these strategies see reductions in unexpected failures (over 30%), increased throughput (25%), and significant cuts in maintenance costs (23%).

The Foundation: Why Data-Driven Pipeline Management Isn't Optional

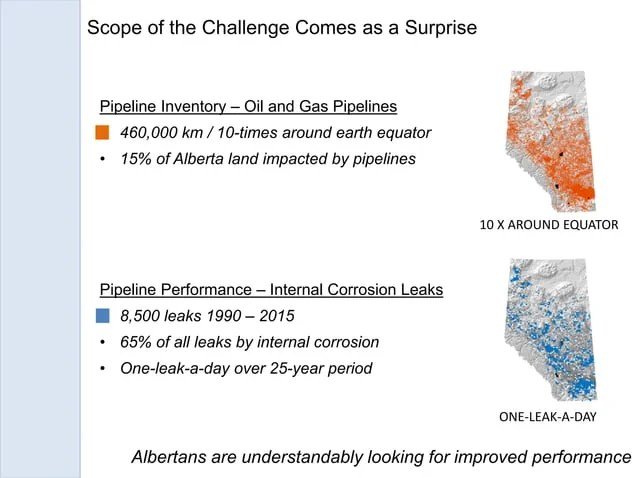

Pipelines are the arteries of modern industry, transporting vital resources across vast distances. Their efficiency directly impacts profitability, and their integrity is paramount for public safety and environmental protection. For Pipeline Project Coordinators, the challenge lies in orchestrating these complex operations, managing everything from scheduling and maintenance to safety oversight and performance reporting.

Traditionally, much of this work relied on periodic inspections, scheduled maintenance, and reactive problem-solving. But with the advent of modern business intelligence (BI) and data analytics, the game has changed. Raw operational data, once a mere record, is now a dynamic resource that, when properly harnessed, provides actionable insights and forms the basis for robust strategies. It helps you move from "what happened?" to "what will happen?" and "what should we do about it?"

Navigating the Data Deluge: Common Challenges in Pipeline Performance Analysis

Transforming a traditional pipeline operation into a data-driven powerhouse isn't without its hurdles. Many organizations face similar obstacles as they embark on this journey. Understanding these challenges is the first step toward overcoming them.

1. The Silo Effect: Data Integration Nightmares

Imagine trying to understand the health of an entire pipeline system when its various parts speak different languages. Sensors report in one format, SCADA (Supervisory Control and Data Acquisition) systems in another, and maintenance logs yet another. This fragmentation makes a holistic view almost impossible.

- The Problem: Managing diverse data streams from countless sources without a unified approach.

- The Solution: Implementing middleware platforms and mandating standardized data formats like JSON or XML. These technologies act as universal translators, bridging the gaps between disparate systems and creating a cohesive data environment.
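
To make the "universal translator" idea concrete, here is a minimal sketch of a normalization layer. The two input shapes (a sensor payload with `id`, `ts`, `psi` and a semicolon-delimited SCADA export line) and the target schema are assumptions made up for illustration, not formats taken from any real system.

```python
import json
from datetime import datetime, timezone

def from_sensor(raw: dict) -> dict:
    """Map a hypothetical sensor payload {"id", "ts" (epoch seconds), "psi"} to the common schema."""
    return {
        "source": "sensor",
        "asset_id": raw["id"],
        "timestamp": datetime.fromtimestamp(raw["ts"], tz=timezone.utc).isoformat(),
        "metric": "pressure_psi",
        "value": float(raw["psi"]),
    }

def from_scada(line: str) -> dict:
    """Map a hypothetical SCADA export line 'TAG;ISO-8601 time;metric;value' to the common schema."""
    tag, ts, metric, value = line.strip().split(";")
    return {
        "source": "scada",
        "asset_id": tag,
        "timestamp": ts,
        "metric": metric,
        "value": float(value),
    }

# Both sources end up as records with identical keys, ready to serialize as JSON.
records = [
    from_sensor({"id": "PS-101", "ts": 1700000000, "psi": 812.4}),
    from_scada("PS-101;2023-11-14T22:15:00+00:00;flow_m3h;431.0"),
]
print(json.dumps(records, indent=2))
```

Once every source is mapped to one record shape, downstream dashboards and models only need to understand that single shape.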

2. The Time Lag: Missing Real-Time Insights

Pipeline systems are dynamic. Pressures fluctuate, temperatures shift, and flow rates change constantly. Relying on stale data means you're always a step behind, reacting to problems after they've escalated instead of preventing them.

- The Problem: A lack of constant, up-to-the-minute updates, hindering early anomaly detection and immediate corrective actions.

- The Solution: Investing in real-time monitoring technologies, which we'll explore in detail, ensures you have an accurate, current snapshot of your pipeline's health at all times.
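
One small, practical piece of avoiding stale data is simply knowing when a feed has gone quiet. The sketch below assumes you keep a "last seen" timestamp per sensor; the sensor IDs and the 30-second limit are illustrative values only.

```python
from datetime import datetime, timedelta, timezone

def stale_sensors(last_seen: dict, now: datetime, max_age: timedelta) -> list:
    """Return the IDs of sensors whose latest reading is older than max_age."""
    return sorted(sid for sid, ts in last_seen.items() if now - ts > max_age)

now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
last_seen = {
    "PT-01": now - timedelta(seconds=5),   # fresh
    "PT-02": now - timedelta(minutes=3),   # stale
    "FT-07": now - timedelta(seconds=40),  # stale
}
print(stale_sensors(last_seen, now, max_age=timedelta(seconds=30)))
# ['FT-07', 'PT-02']
```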

3. The Crystal Ball Conundrum: Lack of Predictive Power

Historical data holds a wealth of information about past failures, maintenance cycles, and operational patterns. Without advanced analytical techniques, this treasure trove remains largely untapped, limiting your ability to forecast potential issues.

- The Problem: Difficulty sifting through vast historical data to recognize patterns and predict potential failures before they occur.

- The Solution: Adopting predictive analytics techniques, including sophisticated machine learning models, to uncover hidden trends and anticipate future events.

4. The Data Tsunami: Scalability Issues

The sheer volume of data generated by modern pipeline infrastructure is staggering and growing exponentially. By 2025, global data volumes are projected to reach an astounding 182 zettabytes. If your systems can't handle this scale, your analytics will quickly become overwhelmed and obsolete.

- The Problem: Managing massive, continuously expanding data volumes without the infrastructure to process and store them efficiently.

- The Solution: Building scalable data architectures, often leveraging cloud computing and distributed processing, designed to grow with your data demands.

5. Staying in Line: Regulatory Compliance Headaches

The pipeline industry is heavily regulated, and for good reason. Accurate data tracking and timely reporting aren't just good practice; they're legal requirements. Failure to comply can lead to hefty fines, reputational damage, and even operational shutdowns.

- The Problem: Ensuring accurate data tracking and timely reporting to meet strict industry standards and minimize risks.

- The Solution: Implementing automated data governance frameworks and reporting tools that guarantee data integrity and simplify compliance audits.
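
One building block that can support data integrity for audits is a tamper-evident log, where each entry's hash covers the previous entry's hash. The following is a minimal sketch of that idea; the event fields are hypothetical, and a real governance framework would add access control, signing, and durable storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(prev_hash: str, payload: dict) -> str:
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()

def append_entry(log: list, payload: dict) -> None:
    """Append a record whose hash depends on the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"payload": payload, "prev_hash": prev_hash,
                "hash": _digest(prev_hash, payload)})

def verify(log: list) -> bool:
    """Recompute the chain; any edited payload breaks every later link."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True

log = []
append_entry(log, {"segment": "A-12", "event": "pressure_test", "result": "pass"})
append_entry(log, {"segment": "A-12", "event": "valve_inspection", "result": "pass"})
print(verify(log))                     # True
log[0]["payload"]["result"] = "fail"   # simulate tampering with an old record
print(verify(log))                     # False
```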

6. The Human Element: Organizational Readiness

Technology alone isn't a silver bullet. Even the most advanced analytics platform is only as good as the people using it. Building cross-functional teams that combine domain expertise with data science knowledge, and guiding them through the cultural shift, is just as crucial.

- The Problem: Lack of a skilled workforce and resistance to change, hindering the adoption of new data-driven methodologies.

- The Solution: Investing in training, fostering a culture of continuous learning, and adopting organizational change management approaches like Agile methodology or Design Thinking to ensure smooth transitions.

The Performance Analysis Framework: Steps to Actionable Insights

Successfully measuring and optimizing pipeline performance involves a systematic approach, moving from raw data capture to sophisticated analysis and visualization.

Step 1: Data Collection — The Foundation of Understanding

Before you can analyze anything, you need reliable data. Modern pipelines are equipped with a vast array of sensors designed to capture every relevant metric.

- What to Collect: Sensors continuously capture metrics like flow rate, pressure, temperature, and leak detection signatures. This granular data provides the raw material for all subsequent analysis.

- How to Collect & Structure: Integrated data management systems, often built around a robust Data Dictionary, are essential for structuring this information. A Data Dictionary defines what each piece of data means, its format, and its relationships, ensuring consistency and clarity across the entire system.
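
A Data Dictionary can be as simple as a table of field definitions that incoming records are checked against. The sketch below is one possible shape for it; the field names, units, and allowed ranges are illustrative assumptions, not recommended engineering limits.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FieldSpec:
    description: str
    unit: str
    dtype: type
    min_value: float
    max_value: float

# Hypothetical dictionary entries; ranges are placeholders for illustration.
DATA_DICTIONARY = {
    "flow_rate": FieldSpec("Volumetric flow at the meter", "m3/h", float, 0.0, 5000.0),
    "pressure": FieldSpec("Line pressure", "kPa", float, 0.0, 15000.0),
    "temperature": FieldSpec("Product temperature", "degC", float, -40.0, 120.0),
}

def validate(record: dict) -> list:
    """Return a list of problems found when checking a record against the dictionary."""
    problems = []
    for name, value in record.items():
        spec = DATA_DICTIONARY.get(name)
        if spec is None:
            problems.append(f"{name}: not in data dictionary")
        elif not isinstance(value, spec.dtype):
            problems.append(f"{name}: expected {spec.dtype.__name__}")
        elif not spec.min_value <= value <= spec.max_value:
            problems.append(f"{name}: {value} {spec.unit} outside allowed range")
    return problems

print(validate({"flow_rate": 420.5, "pressure": 20000.0, "vibration": 0.3}))
```

Here the pressure reading is rejected as out of range and `vibration` is flagged as an undefined field, while the flow rate passes.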

Step 2: Data Preprocessing — Turning Raw into Refined

Raw data is rarely pristine. It's often inconsistent, incomplete, or contains errors. Before any meaningful analysis can occur, this data needs to be cleaned, sorted, and formatted.

- The Process: Cleaning routines remove duplicates, fill or flag gaps, discard out-of-range readings, and put everything into a consistent format. This step is crucial for highlighting key performance metrics and removing noise. Think of it as refining crude oil into usable fuel.

- Tools for Efficiency: Tools featuring Bulk Operations functionality streamline this process, allowing you to apply cleaning and formatting rules across multiple datasets simultaneously, saving immense time and reducing manual errors.
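
As a rough illustration of applying one cleaning rule across several datasets at once, the sketch below replaces missing or out-of-range readings with the last valid value. The dataset names and limits are assumptions, and carrying the last value forward is only one of several reasonable gap-filling choices.

```python
import math

def clean_series(values, low, high):
    """Replace missing (None/NaN) or out-of-range readings with the last valid value."""
    cleaned, last_valid = [], None
    for v in values:
        valid = v is not None and not math.isnan(v) and low <= v <= high
        if valid:
            last_valid = v
        cleaned.append(v if valid else last_valid)
    return cleaned

def bulk_clean(datasets, limits):
    """Apply the same cleaning rule to every dataset, using per-dataset limits."""
    return {name: clean_series(series, *limits[name]) for name, series in datasets.items()}

datasets = {
    "pressure_kpa": [5010.0, None, 5025.0, 99999.0, 5030.0],
    "temperature_c": [18.2, 18.4, float("nan"), 18.5],
}
limits = {"pressure_kpa": (0.0, 15000.0), "temperature_c": (-40.0, 120.0)}
print(bulk_clean(datasets, limits))
```

Note that an invalid reading at the very start of a series stays `None`, since there is no earlier valid value to carry forward.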

Step 3: Data Analysis & Visualization — Unveiling the Story

With clean, structured data, you can finally begin to uncover patterns, identify trends, and derive actionable insights. But presenting this information effectively is just as important as the analysis itself.

- Deep Dive Analysis: Cleansed data is analyzed using various statistical and computational methods. Specialized tools, such as an Overall AI Report or a Pattern Report, can automatically identify significant trends and anomalies that human operators might miss.

- Visual Storytelling: Visual dashboards are your command center. Graphs, heat maps, and trend lines provide a clear, intuitive overview of operational efficiency. These visualizations empower Project Coordinators to quickly grasp complex information, spot emerging issues, and communicate findings effectively to stakeholders.
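
Behind a dashboard trend line there is usually a simple fit. As a minimal sketch, the function below computes an ordinary least-squares line through evenly spaced samples; the throughput numbers are invented for the example.

```python
def linear_trend(ys):
    """Least-squares slope and intercept for evenly spaced samples (x = 0, 1, 2, ...)."""
    n = len(ys)
    x_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(ys))
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean

daily_throughput = [1000.0, 990.0, 985.0, 970.0, 960.0]  # illustrative daily volumes
slope, intercept = linear_trend(daily_throughput)
print(f"trend: {slope:+.1f} units/day")  # trend: -10.0 units/day
```

A steadily negative slope like this is the kind of pattern a coordinator would want surfaced before it shows up as a missed delivery target.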

Advanced Strategies & Technologies: Elevating Pipeline Performance with AI

Moving beyond basic monitoring, the true power of data-driven pipeline management emerges when you integrate advanced strategies and cutting-edge technologies. These innovations allow for proactive intervention and significantly enhance decision-making.

Predictive Maintenance: Anticipating the Future of Your Assets

Imagine knowing a component is about to fail before it actually breaks down. That's the promise of predictive maintenance. By leveraging historical data and real-time monitoring, you can anticipate potential system failures and schedule maintenance proactively.

- How it Works: Machine learning models analyze vast datasets, learning the "signature" of healthy operation and identifying deviations that signal impending trouble. A Classification Report, for instance, can categorize potential failures by type and severity.

- The Impact: Companies implementing predictive maintenance can see up to a 30% reduction in maintenance costs and a 25% extension in asset lifespan. This shifts operations from reactive (fixing after it breaks) to proactive (preventing it from breaking).
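
The idea of learning a "signature" of healthy operation can be illustrated with something far simpler than a full machine learning model: a statistical baseline. The sketch below is a stand-in for that idea only; the vibration readings, the score bands, and the category names are assumptions chosen for the example.

```python
from statistics import mean, stdev

def fit_baseline(healthy_readings):
    """Learn the healthy 'signature' as a mean and sample standard deviation."""
    return mean(healthy_readings), stdev(healthy_readings)

def deviation_score(value, baseline):
    """How many standard deviations a new reading sits from the healthy mean."""
    mu, sigma = baseline
    return abs(value - mu) / sigma

def classify(score):
    """Bucket a score into illustrative severity bands."""
    if score < 2.0:
        return "normal"
    if score < 4.0:
        return "watch"
    return "inspect"

# Hypothetical pump vibration readings (mm/s) recorded during known-good operation.
baseline = fit_baseline([2.0, 2.1, 1.9, 2.0, 2.2, 1.8])

for reading in (2.1, 2.4, 3.0):
    print(reading, classify(deviation_score(reading, baseline)))
```

With this baseline, 2.1 is classified as normal, 2.4 as watch, and 3.0 as inspect. A production model would use many signals and labeled failure history rather than a single threshold on one sensor.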

Machine Learning (ML) Models: The Unseen Pattern Detectives

ML models are the brains behind advanced analytics, capable of processing colossal volumes of data and identifying intricate patterns that human operators simply cannot perceive.

- Power of Algorithms: Models such as Random Forest can reach up to 90% accuracy in predicting pipeline failures. Retrained on new data and on the outcomes of past alerts, they keep improving over time.

- Beyond Human Limits: They highlight subtle shifts in pressure, temperature, or flow that collectively indicate a higher probability of failure, far beyond what simple thresholds can detect.
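
To see why several small shifts together can matter more than any single threshold, consider the sketch below. It is not a Random Forest or any trained model; it simply combines per-signal z-scores into one number. The baselines and both alarm limits are illustrative assumptions.

```python
import math

# Hypothetical healthy baselines: metric -> (mean, standard deviation).
BASELINES = {
    "pressure_kpa": (5000.0, 20.0),
    "temperature_c": (18.0, 0.5),
    "flow_m3h": (430.0, 5.0),
}

def z_scores(reading):
    """Standardize each signal against its own baseline."""
    return {k: (reading[k] - mu) / sigma for k, (mu, sigma) in BASELINES.items()}

def combined_score(zs):
    """Root-sum-square of the z-scores: grows when several signals drift together."""
    return math.sqrt(sum(z * z for z in zs.values()))

reading = {"pressure_kpa": 5050.0, "temperature_c": 19.2, "flow_m3h": 417.0}
zs = z_scores(reading)

per_signal_alarm = any(abs(z) > 3.0 for z in zs.values())  # simple threshold check
combined_alarm = combined_score(zs) > 4.0                  # illustrative joint limit

print(per_signal_alarm, combined_alarm)  # False True
```

Each signal is within three standard deviations of normal, so simple thresholds stay silent, yet the joint score flags the reading. A trained model can learn this kind of interaction from historical data instead of relying on a hand-picked limit.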

Real-time Monitoring Technologies: Your Eyes and Ears on the Ground

Continuous, instantaneous data is the lifeblood of optimized pipeline performance. Several technologies work in concert to provide this critical real-time awareness.

- IoT Sensor Networks: Deploying a dense network of pressure, temperature, and flow sensors provides continuous data streams. These IoT devices are the frontline data gatherers, allowing for quick identification of issues like leaks or blockages as they begin.

- Digital Twins: These are virtual replicas of physical pipeline systems, built from real-time data. A digital twin allows you to simulate various scenarios, test maintenance strategies, and predict how changes might impact the system—all without affecting the live operation. It's like having a perfect, risk-free sandbox for your pipeline.

- AI and ML Algorithms: Crucial for processing the vast quantities of data generated by IoT networks and digital twins. They excel at recognizing complex patterns and identifying anomalies that indicate potential problems.

- Edge Computing: For remote pipeline segments, sending all sensor data to a central cloud for analysis can introduce unacceptable latency. Edge computing brings the analysis closer to the data source, enabling real-time processing and decision-making directly at the remote location, significantly improving speed and responsiveness.
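
A simple example of processing at the edge is deadband reporting: the remote device forwards a reading only when it has moved meaningfully since the last value it sent. The sketch below assumes `(timestamp, value)` tuples and a 2.0 kPa deadband, both chosen purely for illustration.

```python
def deadband_filter(readings, deadband):
    """Yield only readings that differ from the last forwarded value by more than deadband."""
    last_sent = None
    for ts, value in readings:
        if last_sent is None or abs(value - last_sent) > deadband:
            last_sent = value
            yield ts, value

# Hypothetical pressure samples (kPa) taken once per second at a remote station.
readings = [(0, 5000.0), (1, 5000.4), (2, 5000.9), (3, 5003.0), (4, 5003.2), (5, 4990.0)]
forwarded = list(deadband_filter(readings, deadband=2.0))
print(forwarded)  # [(0, 5000.0), (3, 5003.0), (5, 4990.0)]
```

Half of the samples never leave the site, yet the sudden drop at the end is forwarded immediately, which is the trade-off edge processing aims for.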

Risk-Based Maintenance Prioritization: Smart Spending, Smarter Outcomes

Not all assets are created equal in terms of risk. Predictive analytics enables you to intelligently prioritize resources, focusing maintenance efforts on the assets that pose the highest risk of failure or have the greatest impact on operations if they do fail.

- Strategic Resource Allocation: This approach, expected to be adopted by 70% of companies by 2025, can lead to significant cost reductions—potentially a 15% drop in operational expenses (OpEx) and a 10% reduction in personnel costs. It means fixing what matters most, when it matters most.
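
A common way to express risk for prioritization is probability of failure multiplied by consequence of failure. The sketch below ranks assets on that product; the asset names, probabilities, and costs are invented, and in practice the probabilities would come from your predictive models.

```python
from dataclasses import dataclass

@dataclass
class Asset:
    name: str
    failure_probability: float  # estimated chance of failure in the planning period (0 to 1)
    consequence_cost: float     # estimated cost if the asset fails

    @property
    def risk(self) -> float:
        return self.failure_probability * self.consequence_cost

def prioritize(assets, slots):
    """Return the highest-risk assets that fit into the available maintenance slots."""
    return sorted(assets, key=lambda a: a.risk, reverse=True)[:slots]

assets = [
    Asset("Pump station 3", 0.10, 2_000_000),
    Asset("Valve V-218", 0.40, 150_000),
    Asset("River crossing segment", 0.02, 30_000_000),
    Asset("Compressor C-2", 0.25, 400_000),
]

for asset in prioritize(assets, slots=2):
    print(f"{asset.name}: expected loss {asset.risk:,.0f}")
```

Note that the valve has the highest failure probability but ranks last, while the river crossing ranks first despite a 2% probability, because the consequence of failure dominates.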

Real-time Dashboards: Your Operational Command Center

Effective decision-making requires immediate access to critical information. Real-time dashboards provide this at a glance.

- Dynamic Visuals: These dashboards offer continuously updated overviews, complete with automated alerts, trend graphs, and performance benchmarks. They are critical for timely decision-making, allowing Project Coordinators to spot deviations and trigger actions instantly.

- Insight Sharing: Functionalities like Report Assembly facilitate easy sharing of these critical insights across different departments, ensuring everyone from field technicians to executive management is on the same page.
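
Automated alerts on a dashboard usually come down to evaluating a set of rules against the latest snapshot of readings. Here is a minimal sketch of such a rule evaluator; the metrics, limits, and severity labels are assumptions for illustration and do not describe any particular product's alerting feature.

```python
import operator

OPS = {">": operator.gt, "<": operator.lt}

# Hypothetical alert rules; limits are placeholders, not engineering guidance.
RULES = [
    {"metric": "pressure_kpa", "op": ">", "limit": 9000.0, "severity": "critical"},
    {"metric": "flow_m3h", "op": "<", "limit": 100.0, "severity": "warning"},
]

def evaluate(snapshot, rules=RULES):
    """Return an alert message for every rule the current snapshot violates."""
    alerts = []
    for rule in rules:
        value = snapshot.get(rule["metric"])
        if value is not None and OPS[rule["op"]](value, rule["limit"]):
            alerts.append(
                f'{rule["severity"].upper()}: {rule["metric"]}={value} {rule["op"]} {rule["limit"]}'
            )
    return alerts

print(evaluate({"pressure_kpa": 9120.0, "flow_m3h": 250.0}))
# ['CRITICAL: pressure_kpa=9120.0 > 9000.0']
```

Keeping the rules as data rather than code makes it easier for operations staff to review and adjust them without touching the evaluator.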

Team Collaboration Tools: Bridging Insights with Action

Data insights are powerful only when they lead to action. Collaboration tools are essential for closing the loop between data analysis and operational execution.

- Unified Communication: Platforms like Team Chat and integrated Support AI bridge data insights with operational execution. When an anomaly is detected, these tools enable swift communication between analysts, field teams, and management, leading to rapid resolution and an immediate feedback loop between analysis and field action.

The Payoff: Tangible Benefits and Measurable Outcomes

Integrating advanced data analytics, AI, and real-time monitoring isn't just about adopting new tech; it's about fundamentally transforming your pipeline operations for superior results. The benefits are clear and often dramatic.

- Improved Operational Efficiency: Streamlined processes, reduced manual interventions, and optimized resource allocation mean your pipeline runs smoother, with fewer bottlenecks.

- Reduced Downtimes: By predicting and preventing failures, you dramatically cut down on unplanned outages, ensuring continuous operation. Case studies show reductions in unexpected failures by over 30%.

- Lower Maintenance Costs: Predictive maintenance and risk-based prioritization mean you're no longer over-maintaining low-risk assets or reacting expensively to emergency repairs. Significant reductions (e.g., 23%) in maintenance costs are common.

- Enhanced Safety Records: Proactive anomaly detection and risk mitigation directly translate to a safer environment for your personnel, the public, and the environment.

- Increased Throughput: A more efficient, reliable pipeline can handle greater volumes without strain, boosting throughput by as much as 25% and increasing overall pipeline uptime by 15%.

These outcomes aren't just theoretical; they are proven results for organizations that commit to a data-driven approach to pipeline performance.

Best Practices for Pipeline Project Coordinators: Your Roadmap to Success

As a Pipeline Project Coordinator, you are at the forefront of this transformation. Here’s how you can lead the charge and ensure your organization capitalizes on these advanced strategies:

- Prioritize Scalable Systems: Start by building a data infrastructure capable of handling high-frequency, diverse data streams. Think big from day one, knowing data volumes will only increase.

- Implement Automated, Continuous Data Collection: Human error and delays are the enemies of real-time insights. Automate data collection from all sources to ensure accuracy and timeliness.

- Develop Uniform Metrics and KPIs: Standardize your performance indicators across all pipeline segments. This ensures you're comparing apples to apples and can gain a consistent view of performance.

- Utilize Integrated Analytics Platforms: Don't try to stitch together disparate tools. Invest in integrated analytics platforms, such as DataCalculus, that can centralize data, perform complex analysis, and provide actionable insights from a single interface.

- Foster Collaboration and Communication: Data insights are only useful if they reach the right people. Use tools like Team Chat and Admin Tools to seamlessly disseminate reports and insights to relevant teams, from field technicians to senior management. Encourage open dialogue between data scientists and operational staff.

- Champion a Data Culture: Advocate for continuous training and emphasize the importance of data literacy across your teams. Empower your staff to use the data to make better decisions every day.
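
Uniform KPIs are easiest to enforce when every segment is scored by the same function. The sketch below computes three common indicators (availability, throughput per operating hour, and mean time between failures); the segment names and figures are made up for the example.

```python
def segment_kpis(hours_in_period, downtime_hours, volume_moved, failures):
    """Compute the same KPIs, with the same definitions, for any pipeline segment."""
    uptime_hours = hours_in_period - downtime_hours
    return {
        "availability_pct": round(100.0 * uptime_hours / hours_in_period, 2),
        "throughput_per_hour": round(volume_moved / uptime_hours, 2),
        "mtbf_hours": round(uptime_hours / failures, 2) if failures else None,
    }

# Hypothetical 30-day figures for two segments.
segments = {
    "North": dict(hours_in_period=720, downtime_hours=18, volume_moved=351_000, failures=3),
    "South": dict(hours_in_period=720, downtime_hours=36, volume_moved=342_000, failures=4),
}

for name, inputs in segments.items():
    print(name, segment_kpis(**inputs))
```

In this example both segments move the same volume per operating hour, so the difference between them is explained by availability and failure frequency rather than by capacity.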

The Horizon: Future Trends in Pipeline Performance Optimization

The journey of data-driven pipeline optimization is far from over. Several exciting trends are poised to further revolutionize how we manage these critical assets.

- Refined Predictive Analytics: Expect even more sophisticated AI and machine learning models. These will move beyond predicting basic failures to anticipating complex, multi-factor issues, offering hyper-localized and precise maintenance recommendations.

- Expanded IoT and Data Volumes: The proliferation of IoT devices will lead to an unprecedented explosion in data. This will provide even finer-grained detail about pipeline conditions, demanding more robust, real-time processing capabilities.

- Increased Data Accessibility and Scalability via Cloud Computing: Cloud platforms will continue to lower the barrier to entry for advanced analytics, offering scalable, flexible, and cost-effective solutions for data storage and processing, even for the largest pipeline networks.

- Cross-Sector Sharing of Best Practices: Industries will increasingly share insights and technological advancements in data analytics, accelerating innovation. Techniques perfected in one sector might quickly find application in pipeline management.

- Enhanced Diagnostics: Expect more advanced capabilities for immediate gap analysis and for tuning the data flows that feed pipeline analytics, allowing for rapid identification and rectification of inefficiencies in both data handling and operational performance.

These advancements will enhance real-time accuracy, drive deeper innovation in performance analysis, and ultimately ensure pipelines operate at peak efficiency and safety for decades to come.

Your Next Steps: Building a Smarter Pipeline

Optimizing pipeline performance with real-time data and AI isn't just about adopting new technology; it's about adopting a new mindset. It's a commitment to continuous improvement, proactive problem-solving, and leveraging every byte of data to make smarter, safer, and more profitable decisions.

Start small, learn fast, and scale deliberately. Begin by identifying key areas where better data could immediately impact safety or efficiency. Invest in pilot programs, nurture cross-functional expertise, and always prioritize clear communication. The pipeline of tomorrow is a smart pipeline, and the journey to build it starts today.